Introducing Cloudflow

Cloudflow is an SDK and a set of Kubernetes extensions that accelerates your development of distributed streaming applications and facilitates their deployment on Kubernetes.

The rapid advance and adoption of the Kubernetes ecosystem is delivering on the DevOps promise of giving dev teams control over, and responsibility for, the lifecycle of their applications. However, adopting Kubernetes also increases the complexity of delivering end-to-end applications. Cloudflow alleviates that pain for distributed streaming applications, which are often far more demanding than typical front-end or microservice deployments.

Cloudflow integrates with popular streaming engines like Akka, Apache Spark, and Apache Flink. Using Cloudflow, you can easily break down your streaming application into small composable components and wire them together with schema-based contracts. With those powerful abstractions, Cloudflow enables you to define, build and deploy the most complex streaming applications.

Streaming application requirements

Stream processing is a discipline and a set of techniques for extracting information from unbounded data. Streaming applications apply stream processing to provide actionable insights from data as soon as it arrives in the system. The growing popularity of streaming applications is driven by:

- The increasing availability of data from many sources.
- The need of enterprises to speed up their reaction time to that data.

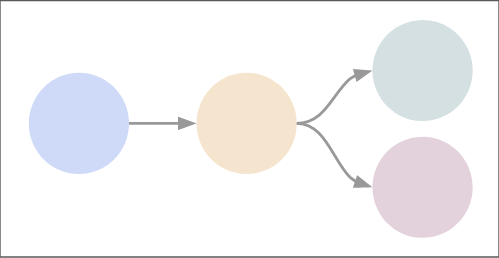

We characterize streaming applications as a connected graph of stream-processing components, where each component specializes in a particular task, following the 'right tool for the job' premise. The figure below, An Abstract Streaming Application, generically illustrates an application that processes data events:

- The first circle on the left represents an initial stage for capturing or accepting data. This could be an HTTP endpoint that accepts data from remote clients, a connection to a Kafka topic, or input from an internal system in an enterprise.
- The next circle to the right represents a processing phase that applies some logic to the data, such as business rules, statistical data analysis, or a machine learning model that implements the business aspect of the application. This processing component may add information to the event and send it as valid data to an external system, or flag the data as invalid and report it.
- The final two circles on the right show the two different data output paths, valid and invalid.

Fig. 1 - An Abstract Streaming Application

Each component in the illustration has different application requirements, different scalability concerns, and often a different Kubernetes deployment strategy. Such specialized needs make even a simple streaming application non-trivial to develop and deploy. For enterprises with complex use cases, creating streaming data applications that can extract actionable business value quickly is challenging.
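The abstract application described above can be sketched in a few lines of plain Python. This is a conceptual illustration only, not Cloudflow code: the `Event` type, the `ingress` and `process` functions, and the "negative readings are invalid" rule are all assumptions made up for the example, and a small in-memory list stands in for an unbounded stream.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List, Tuple


@dataclass
class Event:
    device_id: str
    value: float


def ingress(raw: Iterable[Tuple[str, float]]) -> Iterator[Event]:
    """First stage: accept raw input and turn it into events."""
    for device_id, value in raw:
        yield Event(device_id, value)


def process(events: Iterable[Event]) -> Tuple[List[Event], List[Event]]:
    """Second stage: apply a business rule and split the data into two paths."""
    valid: List[Event] = []
    invalid: List[Event] = []
    for event in events:
        # Hypothetical rule for illustration: negative readings are invalid.
        if event.value >= 0:
            valid.append(event)
        else:
            invalid.append(event)
    return valid, invalid


# Final stage: the two output paths, valid and invalid.
valid, invalid = process(ingress([("a", 1.5), ("b", -2.0), ("c", 0.0)]))
```

In a real streaming application each of these stages would typically run as a separately deployed and scaled component rather than as a function call in one process, which is where the differing deployment requirements come from.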

The Cloudflow approach

Cloudflow offers a complete solution for the creation, deployment, and management of streaming applications on Kubernetes. For development, it offers a toolbox that simplifies and accelerates application creation. For deployment, it comes with a set of extensions that makes Cloudflow streaming applications native to Kubernetes.

A common challenge when building streaming applications is wiring all of the components together and testing them end-to-end before going into production. Often, different teams are responsible for different parts of the application, making coordination difficult. Repeatedly spinning up and deleting Kubernetes pods or clusters can be both cumbersome and costly. Cloudflow addresses this by allowing you to validate the connections between components and to run your application locally during development to avoid surprises at deployment time.

Everything in Cloudflow is done in the context of an application, which represents a self-contained distributed system of data processing services connected together by data streams. A Cloudflow application includes:

- Streamlets, which contain the stream processing logic.
- A blueprint, which defines streamlets, topics, and how they are connected and configured.
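To make the relationship between streamlets, topics, and a blueprint more concrete, here is a conceptual Python sketch of the kind of wiring check that schema-based contracts make possible before deployment. It does not use the Cloudflow API or the actual blueprint file format: the `Streamlet` and `Topic` classes, the `validate` function, and all streamlet, port, and schema names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Streamlet:
    name: str
    inlets: Dict[str, str] = field(default_factory=dict)   # port name -> schema name
    outlets: Dict[str, str] = field(default_factory=dict)  # port name -> schema name


@dataclass
class Topic:
    name: str
    producers: List[str]  # references of the form "streamlet.outlet"
    consumers: List[str]  # references of the form "streamlet.inlet"


def validate(streamlets: List[Streamlet], topics: List[Topic]) -> List[str]:
    """Return wiring problems; an empty list means the wiring is consistent."""
    by_name = {s.name: s for s in streamlets}
    problems: List[str] = []
    for topic in topics:
        refs = [(r, "outlets") for r in topic.producers]
        refs += [(r, "inlets") for r in topic.consumers]
        schemas = set()
        for ref, kind in refs:
            streamlet_name, _, port = ref.partition(".")
            streamlet = by_name.get(streamlet_name)
            ports = getattr(streamlet, kind) if streamlet else {}
            if port not in ports:
                problems.append(f"{topic.name}: unknown port '{ref}'")
            else:
                schemas.add(ports[port])
        # Every port attached to a topic must agree on one schema.
        if len(schemas) > 1:
            problems.append(f"{topic.name}: mismatched schemas {sorted(schemas)}")
    return problems


streamlets = [
    Streamlet("ingress", outlets={"out": "SensorData"}),
    Streamlet("processor", inlets={"in": "SensorData"},
              outlets={"valid": "Metric", "invalid": "InvalidMetric"}),
    Streamlet("valid-logger", inlets={"in": "Metric"}),
    # Deliberately wrong: this inlet expects Metric, not InvalidMetric.
    Streamlet("invalid-logger", inlets={"in": "Metric"}),
]
topics = [
    Topic("sensor-data", ["ingress.out"], ["processor.in"]),
    Topic("valid-metrics", ["processor.valid"], ["valid-logger.in"]),
    Topic("invalid-metrics", ["processor.invalid"], ["invalid-logger.in"]),
]
problems = validate(streamlets, topics)
```

Here `problems` contains a single entry reporting the schema mismatch on the `invalid-metrics` topic. Catching this class of error from the declared contracts alone, without starting any component, is the idea behind validating connections during development.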

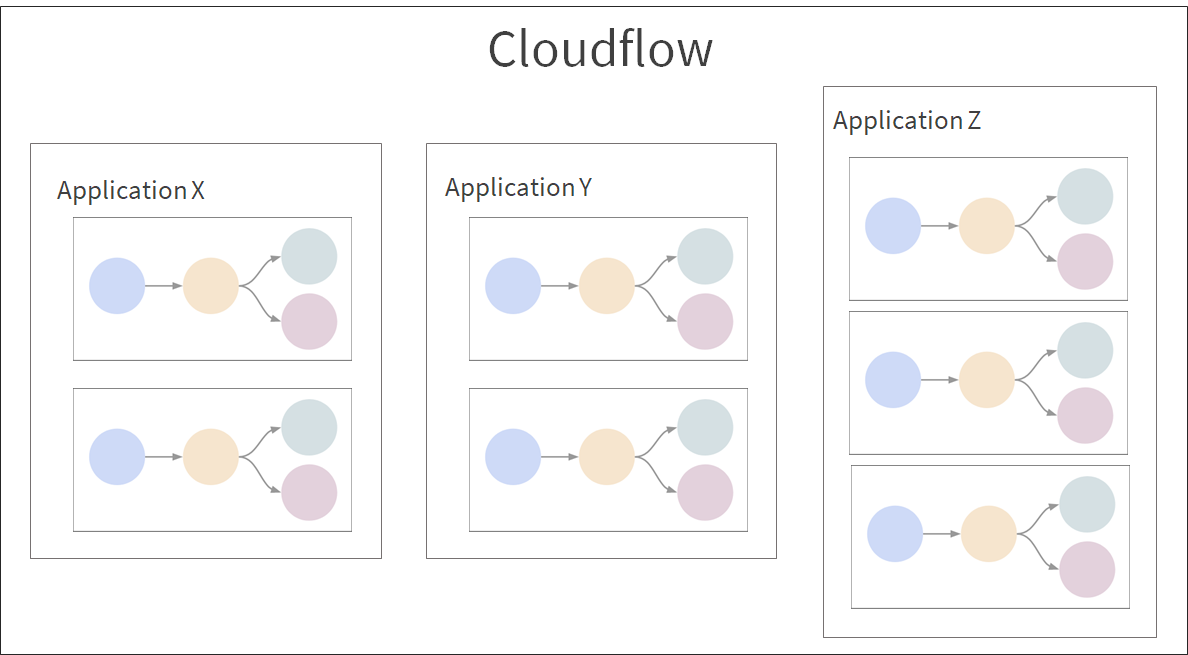

At deployment time, Cloudflow uses the blueprint to deploy all its streamlets so that data can start streaming as described. Cloudflow tools make it easy to create and manage multiple applications, each one forming a separate self-contained system, as illustrated below.

Feature set

Cloudflow dramatically accelerates your application development efforts, reducing the time required to create, package, and deploy an application from weeks to hours. Cloudflow includes tools for developing and deploying streaming applications:

- The Cloudflow application development toolkit includes:
  - An SDK definition for Streamlet, the core abstraction in Cloudflow.
  - An extensible set of runtime implementations for Streamlet(s). Cloudflow provides support for popular streaming runtimes, like Apache Spark Structured Streaming, Apache Flink, and Akka.
  - A Streamlet composition model driven by a blueprint definition.
  - A Sandbox execution mode that accelerates the execution and testing of your applications on your development machine.
  - A set of sbt plugins for packaging Cloudflow applications into deployable containers and generating an application descriptor that informs the deployment.
  - A CLI, in the form of a kubectl plugin, that facilitates manual, scripted, and automated management of the application.
- For deploying to Kubernetes clusters, the Cloudflow operator takes care of orchestrating the deployment of the different parts of a streaming pipeline as an end-to-end application.

The default build tool for Cloudflow applications is sbt, but Maven is also supported.
